Initially in previous versions(before 11g), Oracle Database started off with caching data block in memory, which inturn is used to construct results for user’s request. Even though data blocks are cached in memory, it should construct results from cache which takes from time, resource, locks (latches & enqueues) etc., Oracle has gone to next level of caching in 11g database. Effective from Oracle 11g, Oracle can cache result sets. Oracle can cache the results of both SQL & PL/SQL. In this article, I am going to discuss basics of SQL result cache and I am planning to write about PL/SQL result cache in future articles. Quite obvious, at the end of article, most people will feel that this is the most interesting performance tuning hints while working in Oracle 11g database.

Few basics about result cache

Oracle introduced the memory structure called Result Cache to store the results of SQLs & PL/SQL. The database serves the results for the executed SQL queries and PL/SQL functions from the result cache instead of re-executing the actual query, quite obvious this feature will increase the performance. Memory allocated(can be controlled using RESULT_CACHE_MAX_SIZE parameter) for the result cache is taken from the shared pool. By now one might come to the conclusion that result cache will improve the performance of the repeatly used PL/SQL & SQL. RESULT_CACHE_MAX_SIZE specifies the maximum amount of SGA memory (in bytes) that can be used by the Result Cache. If the value of this parameter is 0, then the result cache feature is disabled.

Here are some of the parameters that control the behaviour of the result cache.

SQL> col name format a30

SQL> col value format a25

SQL> select name,value from v$parameter where name like 'result_cache%';

NAME VALUE

------------------------------ -------------------------

result_cache_mode MANUAL

result_cache_max_size 5373952

result_cache_max_result 5

result_cache_remote_expiration 0

Brief description about the above parameters:

RESULT_CACHE_MODE: RESULT_CACHE_MODE specifies when a ResultCache operator is spliced into a query's execution plan. If set to FORCE, all the queries are cached if they are qualifed and fit in cache. The default is MANUAL, which indicates that only queries with the hint will be cached.

RESULT_CACHE_MAX_SIZE: Specified maximum size of the result cache. Remember, result cache is part of shared pool and maximum can be of 75% of shared pool size. Also query result.

RESULT_CACHE_MAX_RESULT: Specifies percentage of RESULT_CACHE_MAX_SIZE that any single query result set can occupy. (Just to prevent the big result set from throwing out all other small resultset).

RESULT_CACHE_REMOTE_EXPIRATION: Specifies the number of minutes that a cached result that accesses a remote object will remain valid. Setting this parameter to 0 implies that results using remote objects should not be cached. Setting this parameter to a nonzero value may produce stale answers (for example, if the remote table used by a result is modified at the remote database). I personally think it should be set to positive value if remote database is read only database.

From the below query result, it is evident that result cache is part of shared pool.

SQL> select * from v$sgastat where pool='shared pool' and name like 'Result%';

POOL NAME BYTES

------------ -------------------------- ----------

shared pool Result Cache: Memory Mgr 200

shared pool Result Cache: Bloom Fltr 2048

shared pool Result Cache: Cache Mgr 152

Like almost all memory structure in oracle instance, Result cache is also protected by latches. Latches, being serialization devices, are scalability inhibitors. Don’t just to come to conclusion that Latches are evil; afterall we expect database to maintain ACID property.

SQL> select * from v$latchname where name like 'Result Cache%';

LATCH# NAME HASH

---------- ------------------------------ ----------

377 Result Cache: Latch 1545889529

378 Result Cache: SO Latch 986859868

Oracle 11g provides couple of views to see the behavious/statistics of result cache. Click on the below view names to see the details in Oracle documentation.

APIs for the result cache management is available in PL/SQL package DBMS_RESULT_CACHE.

Couple of examples on how to use APIs on DBMS_RESULT_CACHE package.

SQL> set serveroutput on size 99999

SQL> exec DBMS_RESULT_CACHE.MEMORY_REPORT("TRUE")

R e s u l t C a c h e M e m o r y R e p o r t

[Parameters]

Block Size = 1K bytes

Maximum Cache Size = 5248K bytes (5248 blocks)

Maximum Result Size = 262K bytes (262 blocks)

[Memory]

Total Memory = 2400 bytes [0.001% of the Shared Pool]

... Fixed Memory = 2400 bytes [0.001% of the Shared Pool]

....... Cache Mgr = 152 bytes

....... Memory Mgr = 200 bytes

....... Bloom Fltr = 2K bytes

....... State Objs = bytes

... Dynamic Memory = 0 bytes [0.000% of the Shared Pool]

PL/SQL procedure successfully completed.

SQL> exec DBMS_RESULT_CACHE.flush;

PL/SQL procedure successfully completed.

DBMS_RESULT_CACHE package has lot more functions/procedures and if you are looking for more, login into 11g using SQL* Plus and execute “desc DBMS_RESULT_CACHE” and the documentation for the same can be found

here.

Background Process

SQL> select description from V$BGPROCESS where name like 'RC%';

DESCRIPTION

----------------------------------------------------------------

Result Cache: Background

Watch my blog to see result set in action.

As usual, comments are always welcome.

-Thiru

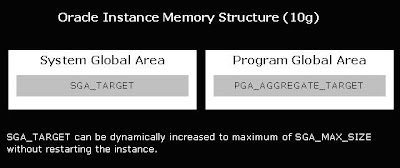

Before Oracle 9i, DBA has to maintain the whole bunch of memory parameters like DB_BLOCK_BUFFERS, SHARED_POOL_SIZE, set of PGA parameters *_AREA_SIZE,

Before Oracle 9i, DBA has to maintain the whole bunch of memory parameters like DB_BLOCK_BUFFERS, SHARED_POOL_SIZE, set of PGA parameters *_AREA_SIZE,